{tocify} $title={Table of Contents}

Day by day the amount of data collected by any business/enterprise is growing - both the historical and transactional. But is there any need to keep them? Is it useful to business in any way? -- Yes, the data which is collected so far or would be collected on going can be used to deduce value by process of analyzing data and presenting actionable information to help executives, managers and other corporate end users to make informed business decisions.(assisting Business Intelligence)

Like it is said Information when processed on turns into Knowledge.

To make this possible Raw Data(information) --> Transformed data(knowledge), Microsoft introduced data integration service i.e. Azure Data Factory which is capable of extracting-transformation-loading (ETL) or extracting-loading-transformation(ELT), for transformation activities either of following can be used - HDInsight, Data Bricks, Azure Data lake U-SQL, Machine Learning, stored procedures etc and is managed by Microsoft.

Each business/entity have there own way of storing the data (data stores), some prefer to store on premises, some on cloud, some on file system, some in different database etc.

There is three way you can trigger pipeline - manually or Scheduled based and Event Based(example -the deletion of a file, in your Azure Storage account) .

Scheduled based will invoke automatically based on your scheduled time. It can be set to execute on daily, weekly, monthly basis (scheduled ) or can be set on time intervals of fixed size (Tumbling window)

The steps in pipelines are termed as Activities and can be Data movement activities, Data transformation activities (Data bricks),Control activities (Lookup activity, if conditions, wait activity,for each etc).

Below is small demonstration to help getting started with Azure data Factory.

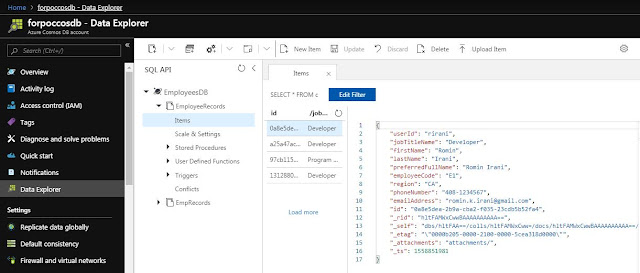

To keep it simple have created collection with four records only (In reality there would be lot of data, upto millions of records )

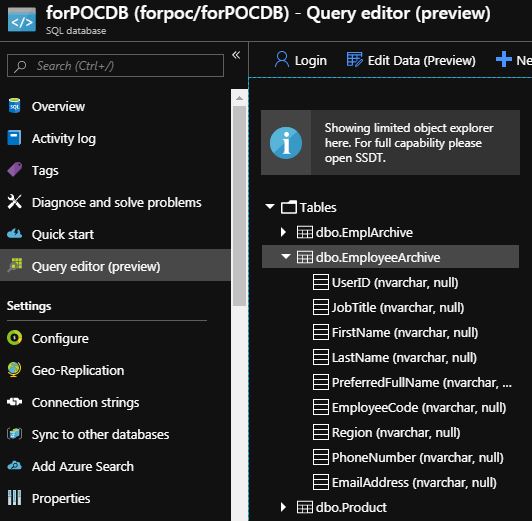

To keep it simple have created table with column names matching to that of fields in Records of cosmos db. I made small mistake here which led to failure in Pipeline run.

In summary page, verify the details and if any changes are to be done then Edit option can be used.

Click on Finish, that's it, pipeline is ready, save it , publish it and test.

For testing I triggered the pipeline manually.

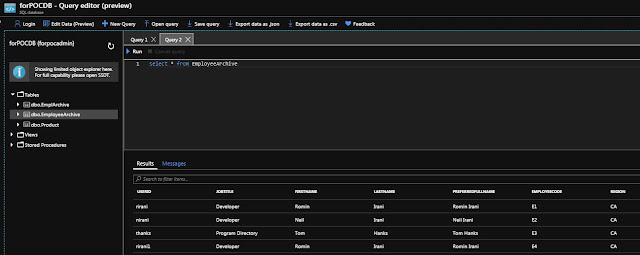

After the pipeline run succeeded, entries were found in the SQL DB

Why and What is Azure Data Factory

Day by day the amount of data collected by any business/enterprise is growing - both the historical and transactional. But is there any need to keep them? Is it useful to business in any way? -- Yes, the data which is collected so far or would be collected on going can be used to deduce value by process of analyzing data and presenting actionable information to help executives, managers and other corporate end users to make informed business decisions.(assisting Business Intelligence)

Like it is said Information when processed on turns into Knowledge.

To make this possible Raw Data(information) --> Transformed data(knowledge), Microsoft introduced data integration service i.e. Azure Data Factory which is capable of extracting-transformation-loading (ETL) or extracting-loading-transformation(ELT), for transformation activities either of following can be used - HDInsight, Data Bricks, Azure Data lake U-SQL, Machine Learning, stored procedures etc and is managed by Microsoft.

Each business/entity have there own way of storing the data (data stores), some prefer to store on premises, some on cloud, some on file system, some in different database etc.

There is currently support for more than 72 data store to which ADF can connect to and extract data.

Like Logic Apps, where we have provision to connect services which are cloud based or also on premises (integrating various services) like wise Azure Data factory can connect to various types of data stores which are cloud based or also on premises.

Like Logic Apps, where we have provision to connect services which are cloud based or also on premises (integrating various services) like wise Azure Data factory can connect to various types of data stores which are cloud based or also on premises.

You get a browser based designer (also available in visual studio), where you can design the Pipeline (data driven workflow) by setting the Trigger (the way to start the workflow) and then selecting the appropriate activity or series of activities.

You have provision to debug and validate the workflow, once done you publish the workflow, it gets deployed – ready to use. you are charged only when it is executed - based on the number of activities that are run, the volume of data that is moved etc.

Building Blocks of Azure Data Factory

TRIGGERS in ADF

There is three way you can trigger pipeline - manually or Scheduled based and Event Based(example -the deletion of a file, in your Azure Storage account) .

Scheduled based will invoke automatically based on your scheduled time. It can be set to execute on daily, weekly, monthly basis (scheduled ) or can be set on time intervals of fixed size (Tumbling window)

CONNECTIONS in ADF

The way to connect to data store and establishing a way to allow data movement to and fro is done via Integration Runtime and Linked Services.

Integration runtime acts as a Data gateway/medium (on premises to cloud, cloud to cloud) whereas Linked Services are connection to data sources and destinations.

Linked services are connection strings much like bindings in function App and Connectors config in Logic App. Thus Linked Service runs in scope of Integration Runtime.

Currently three types of IR are supported

- Azure (Within Azure)

- Self-hosted (On premises)

- Azure-SSIS (dedicated for SSIS package)

ACTIVITIES in ADF

The steps in pipelines are termed as Activities and can be Data movement activities, Data transformation activities (Data bricks),Control activities (Lookup activity, if conditions, wait activity,for each etc).

You can initiate with any of the activity based on requirement. Below is how they are categorized

DATASET in ADF

Datasets represent the way data is present inside the data store i.e. data structures within the data stores .

Thus an input dataset represents the input which will be used and an output dataset represents the output which will be produced by an activity.

PIPELINE in ADF

It is here where all the components discussed above are used to design the data workflow with help of an activity or group of activities, like from where data is to be collected, how it is to be collected, what is to be done with data.

So it is a logical grouping of activities or a scope of the tasks.

MONITORING PIPELINE in ADF

Inbuilt support for monitoring the pipeline runs is provided out of box, also details on Activity level can be checked and alerts can be set on top of it.Scenario

For demo I am using simple scenario - say a business wants to archive the transactional data from the application database (where data is retained for few days only and then after gets deleted). As business needs the data for further processing, it wants it to be stored somewhere i.e. move data from application database to archive database periodically.Here I have used Cosmos db as application database and Azure SQL database as the archive database.

Steps in creating solution

Create Azure Cosmos DB -- Source

To keep it simple have created collection with four records only (In reality there would be lot of data, upto millions of records )

Create Azure SQL DB -- Destination

To keep it simple have created table with column names matching to that of fields in Records of cosmos db. I made small mistake here which led to failure in Pipeline run.

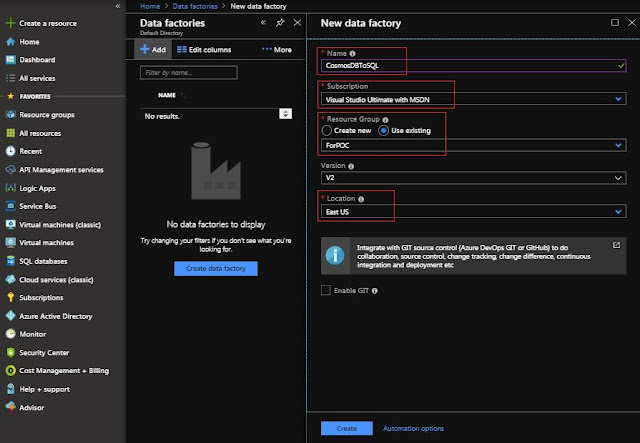

Create Azure Data Factory

Provide unique name to Data Factory instance to be created as per purpose , select the subscription, resource group, version and location.

Note that currently only limited locations are available to choose from.

Note that currently only limited locations are available to choose from.

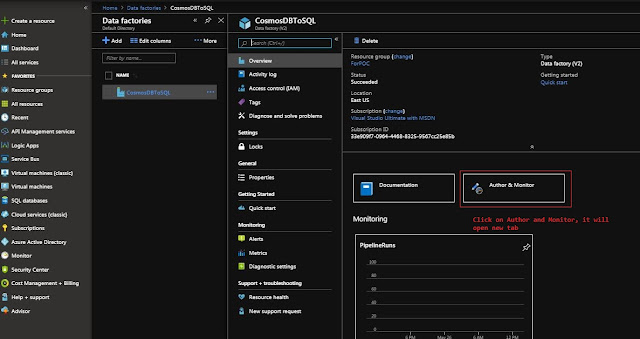

After creating an instance of Data Factory, you need to click on Author and Monitor - which will lead to ADF designer portal (dev env) which opens in separate tab.

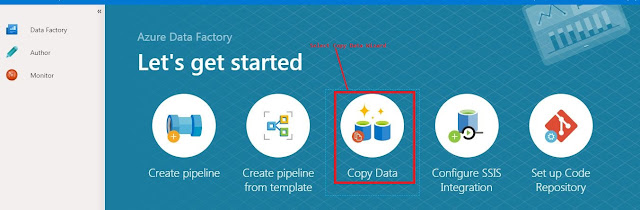

The new tab opens with options to get started with. Either of first two can be used to create pipeline and but as we need to create a pipeline to copy data so selected the Copy Data Wizard(it creates a pipeline with Copy data activity)

Give a descriptive name and select the Task schedule, as I want to run it manually once , Run once now is selected.

Give a descriptive name and select the Task schedule, as I want to run it manually once , Run once now is selected.

Now we need to provide the data store from where data is to be extracted and for that we need to create new connection (Linked Service)

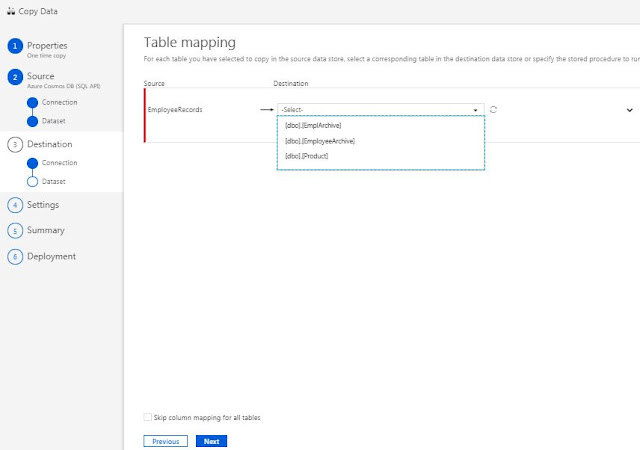

Next is to create connection with Destination , select Azure SQL database

EmployeeArchive table is selected as destination as data is to be copied to it

As data source is Cosmos DB which is SQL API based, create linked service by selecting Azure Cosmos DB (SQL API)

Next provide name to the Linked Service, select the Runtime . As my data store is in Cloud itself , AutoResolveIntegrationRuntime is selected.

If source data store would have been on Premises, then Integration Runtime agent had to be installed on the onPremises server and that would have been used as gateway.

For Connection String, as it is same subscription, From Azure Subscription is selected(it automatically fetches the keys/connection string), else we can also enter manually.

Select the CosmosDB account and Database which is to be connected, and click on Test connection and Finish.

Once connection is tested, create a dataset

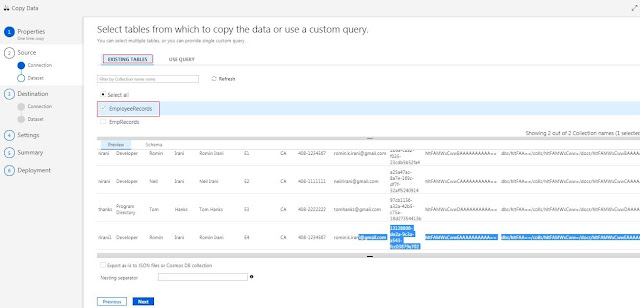

Here Table data is to be fetched directly so EmployeeRecords table is selected from Existing tables, else query can also be used to fetch the data

Next is to create connection with Destination , select Azure SQL database

Provide the details like server name, database name, login details -- here too it is on Azure and same subscription

With presents with column mapping for creating Destination Dataset, you can make changes select/unselect here or continue.

As for this demo, I kept it simple and same - one to one mapping

Select what is to be done if any exception occurs in Pipeline -- You can abort and fail the copy activity when incompatible data is encountered (default behavior) or You can continue to copy all of the data by adding fault tolerance and skipping incompatible data rows (also errors can be logged).

Click on Finish, that's it, pipeline is ready, save it , publish it and test.

Testing

After the pipeline run succeeded, entries were found in the SQL DB

Summary

- It is the cloud-based data integration service (ETL)

- It allows you to create and schedule data-driven workflows(pipelines) for orchestrating data movement and transforming data at scale, that can ingest data from disparate data stores .

If you have questions or suggestions, feel free to do in comments section below !!!

Do share if you find this helpful ....... Knowledge Sharing is Caring !!!!!!

- It is the cloud-based data integration service (ETL)

- It allows you to create and schedule data-driven workflows(pipelines) for orchestrating data movement and transforming data at scale, that can ingest data from disparate data stores .

Knowledge Sharing is Caring !!!!!!

Learn More about Azure Data Factory

- Getting started with ADF - Loading data in SQL Tables from multiple parquet files dynamically

- Getting started with ADF - Creating and Loading data in parquet file from SQL Tables dynamically

- Getting Started with Azure Data Factory - Insert Pipeline details in Custom Monitoring Table

- Getting Started with Azure Data Factory - CopyData from CosmosDB to SQL

Tags:

Azure Data Factory